AI Conversationalists Showdown: Comparing ChatGPT, Perplexity, and Claude

Key Takeaways

- ChatGPT, Claude, and Perplexity all use different language models (LLMs) to process and respond to prompts, with Perplexity offering the most varied choice of LLMs.

- ChatGPT is available in most countries worldwide, Claude is available in a handful of predominantly English-speaking countries, and Perplexity is available worldwide except in the EU. All three have a free tier, and their paid plans start at $20 per month.

- ChatGPT has a token limit of 4,096 (8,192 for GPT-4), Claude has an input limit of 200,000 tokens, and Perplexity’s token limits are not published yet.

Though ChatGPT is the world’s most popular AI chatbot, there are plenty of great alternatives out there, including Perplexity and Claude. But what does each of these three AI chatbots offer, and which is best for you?

ChatGPT vs. Claude vs. Perplexity: A Quick Comparison

It’s important to understand the basics of ChatGPT, Claude, and Perplexity before we get into the deeper details. Note that we’ll be comparing ChatGPT and Perplexity to Claude 2.1, the newest version of Claude, which has replaced its predecessor.

| | ChatGPT | Claude | Perplexity |
| --- | --- | --- | --- |
| Availability | Worldwide (excl. North Korea, Iran, China, Cuba, Syria, and Nepal). | US, UK, Canada, Ireland, Australia, New Zealand. | Worldwide (excl. EU countries). |
| LLM Used | GPT-3.5 and GPT-4. | Claude. | Perplexity: GPT-3.5. Perplexity Pro: GPT-4, Claude. |
| Price | GPT-3.5: Free. GPT-4: $20/month. | Claude: Free. Claude Pro: $20/month. | Perplexity: Free. Perplexity Pro: $20/month or $200/year. |
| Training Data | Up to September 2021. | Up to early 2023. | Perplexity: Up to September 2021 with GPT-3.5. Perplexity Pro: varies depending on LLM used. |
| Token Limit | GPT-3.5: 4,096 tokens. GPT-4: 8,192 tokens. | 200,000 tokens. | Varies depending on LLM used. |

Now, let’s dive into the specifics of these three AI chatbot tools.

1. LLMs Used

AI chatbots rely on large language models (LLMs) to process and respond to prompts. While ChatGPT and Claude use their own original LLMs, Perplexity offers a more varied choice.

ChatGPT uses GPT-3.5 and GPT-4. If you’re using the free version of ChatGPT, you’ll be interacting with GPT-3.5. But if you’re using ChatGPT Plus, OpenAI’s paid chatbot version, you’ll be interacting with GPT-4.

Claude, created by Anthropic, uses its most recent LLM version, Claude 2.1. Unlike GPT-3.5 and GPT-4, which are both still available, Claude 2.1 has entirely replaced previous versions of Claude.

Perplexity is a little different. This is an AI chatbot platform that gives you access to multiple LLMs. The free version of Perplexity lets you access GPT-3.5 in addition to its Copilot feature. Be sure not to confuse this Copilot with Microsoft’s AI chatbot that goes by the same name. On Perplexity’s platform, Copilot is a GPT-4-powered internet search tool that was launched in May 2023.

The Pro version gives you access to GPT-4 and Claude, too. It’s important to note that there is no original Perplexity LLM. The platform uses preexisting LLMs, not its own.

2. Availability and Price

ChatGPT is available in most countries worldwide, though it has been banned in North Korea, China, Iran, Cuba, Syria, and Nepal. You can use ChatGPT for free, which gives you access to GPT-3.5, or you can use ChatGPT Plus. This premium version gives you access to OpenAI’s latest LLM version, GPT-4, for $20 monthly.

Claude is available in predominantly English-speaking countries, including the US, UK, Canada, Australia, Ireland, and New Zealand. A Claude account is entirely free and gives you access to Anthropic’s latest LLM version, Claude 2.1. Anthropic does offer a premium plan, known as Claude Pro, which offers 5x more usage for $20 monthly.

Perplexity is available in most countries worldwide, though it is not available in the EU. Perplexity’s free version gives you access to GPT-3.5 and the Copilot search feature, while Perplexity Pro also gives you access to GPT-4 and Claude, and gives you more usage for Copilot, for $20 monthly or $200 annually.

3. Token Limits

On AI chatbot platforms, your input and output text is made up of tokens, which can be phrases, words, individual characters, segments of code, and more, depending on the LLM in use and its “tokenization” method. Since the number of tokens affects the computational costs for the platform, you typically have an input and output limit. These limits refer to the number of tokens the chatbot will process in your prompt and respond with in a single conversation, respectively.
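To get a feel for how text turns into tokens, here is a deliberately naive sketch that splits a prompt into words and punctuation marks. It is only an illustration: the function name `naive_tokenize` is made up for this example, and real LLM tokenizers use their own learned vocabularies, so the counts they produce will differ.

```python
import re


def naive_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (illustration only).

    This is NOT how GPT or Claude tokenize text; it just shows that a
    prompt is counted in pieces rather than in characters or sentences.
    """
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches one punctuation or symbol character.
    return re.findall(r"\w+|[^\w\s]", text)


prompt = "Hello, world! print(x)"
tokens = naive_tokenize(prompt)
print(tokens)
print(f"{len(tokens)} tokens")
```

Even in this simplified form, a short line of code such as `print(x)` breaks into several tokens, which is why code-heavy prompts can use up a limit faster than plain prose.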

When using the free version of ChatGPT, the overall token limit is 4,096 tokens, with input and output being split evenly. Therefore, the input and output limits for GPT-3.5 are both 2,048 tokens. GPT-4, on the other hand, doubles the token limit to 8,192, with the input and output limit being 4,096 each.

Claude, on the other hand, has a much larger input limit of 200,000 tokens, allowing you to have much longer conversations with the Claude 2.1 LLM. However, the output limit is only 2,000 tokens, which is worth keeping in mind.
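The limits above are easier to compare side by side. The following is a rough sketch that stores the input and output limits quoted in this article and checks whether a prompt and reply of a given size would fit. The model labels, the `TokenLimits` class, and the `fits` function are hypothetical names used for illustration, not identifiers from any official API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenLimits:
    max_input: int   # tokens allowed in the prompt
    max_output: int  # tokens allowed in the reply


# Figures as quoted in this article; the keys are illustrative labels only.
LIMITS = {
    "gpt-3.5": TokenLimits(max_input=2_048, max_output=2_048),
    "gpt-4": TokenLimits(max_input=4_096, max_output=4_096),
    "claude-2.1": TokenLimits(max_input=200_000, max_output=2_000),
}


def fits(model: str, prompt_tokens: int, reply_tokens: int) -> bool:
    """Return True if both the prompt and the reply stay within the limits."""
    limits = LIMITS[model]
    return prompt_tokens <= limits.max_input and reply_tokens <= limits.max_output


for model in LIMITS:
    print(model, fits(model, prompt_tokens=3_000, reply_tokens=500))
```

A 3,000-token prompt is too long for GPT-3.5 under these figures but fits GPT-4 and Claude 2.1, while a reply longer than 2,000 tokens would exceed Claude’s output limit regardless of how short the prompt is.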

Unfortunately, Perplexity’s official token limits for its chatbots are yet to be published at the time of writing. However, Perplexity’s token limit for Copilot searches alone is 2,000 tokens. Perplexity’s basic version also only allows five Copilot requests every four hours. If you upgrade to Perplexity Pro, this shoots up to over 300 requests per day.

4. Data Accuracy and Hallucinations

While using any LLM, it’s important to be on the lookout for two things: inaccuracy and hallucination.

Since AI chatbots entered the mainstream, there have been concerns about the accuracy of their responses. A study conducted by Stanford University and UC Berkeley found that ChatGPT’s accuracy was worsening over time, so the concern is legitimate.

While you can use AI chatbots to check grammar, write cover letters, or even create poems, you can also use them for factual information, such as a historical fact, political definition, or literary summary. This is where inaccuracy can pose an issue.

ChatGPT, Claude, and Perplexity can all provide false information. This isn’t intentional, and can come from biased or inaccurate resources used in training. Because there’s no published list of training data for these LLMs, it’s impossible to know how much of the training data is unreliable. Therefore, the best thing to do is fact-check any information given by an LLM against another source.

ChatGPT has been shown to hallucinate, as discussed in an NCBI study. Anthropic has also stated on its own website that Claude can hallucinate. Therefore, whether you’re using Claude or one of the GPT versions on Perplexity, it’s important to remember that hallucination is possible.

5. Conversational Abilities

LLMs are designed to interact with users in a human-like manner. This involves understanding context and nuance, keeping up with additional prompts or parameters, and responding with natural language. So, how do ChatGPT, Claude, and Perplexity measure up here?

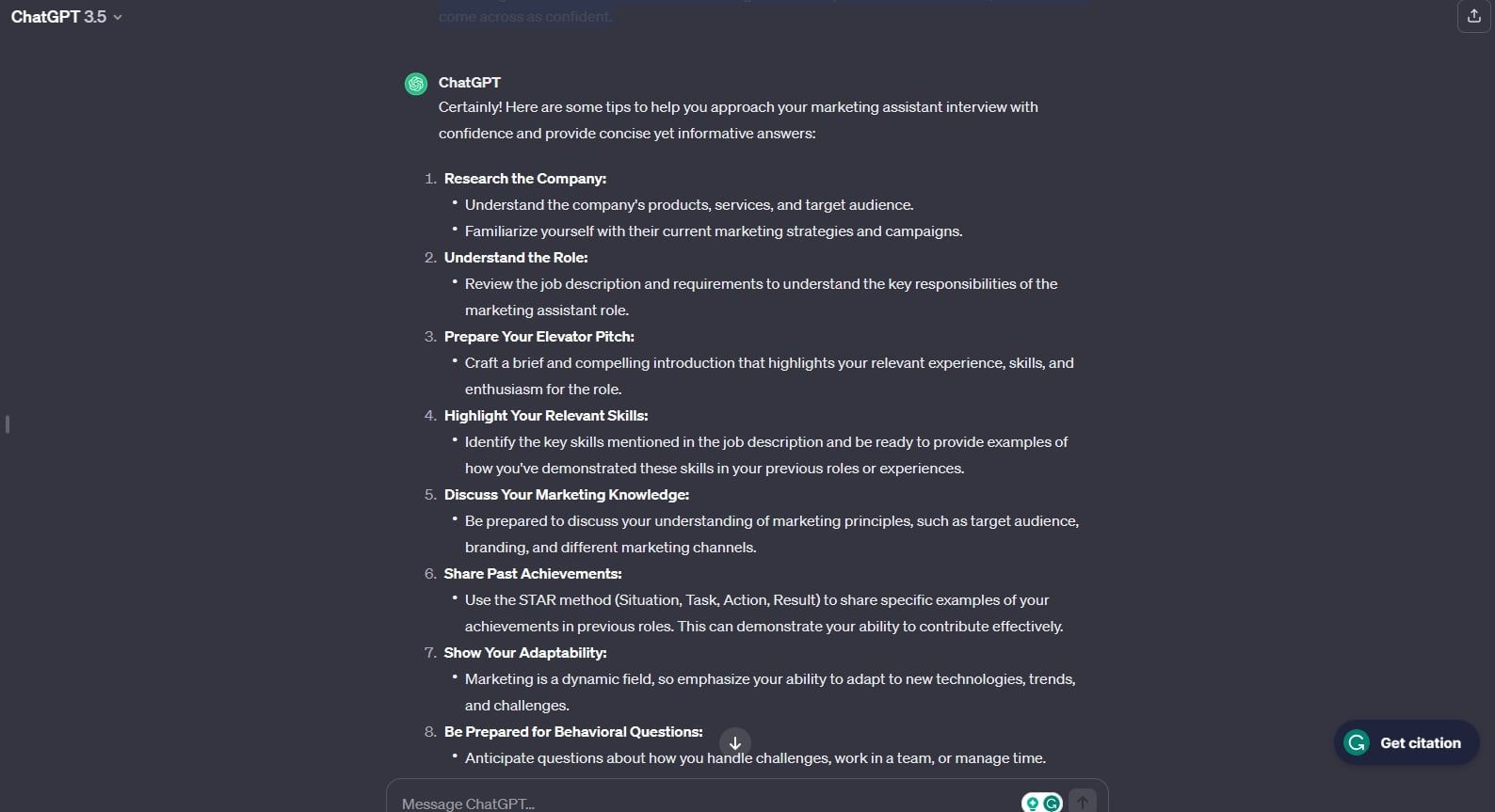

Oftentimes, AI chatbots will provide you with bulleted lists if your prompts are quite loaded. We tested this on ChatGPT-3.5 and Claude using the following prompt:

I need some advice. Can you tell me the best way to approach an interview for a marketing assistant? The interviewer is looking for concise yet informative answers, and I want to come across as confident.

These are the responses we got from the two chatbots. Here are ChatGPT’s results:

ChatGPT gave a longer response with more bullet points and information. The language was very conversational and natural.

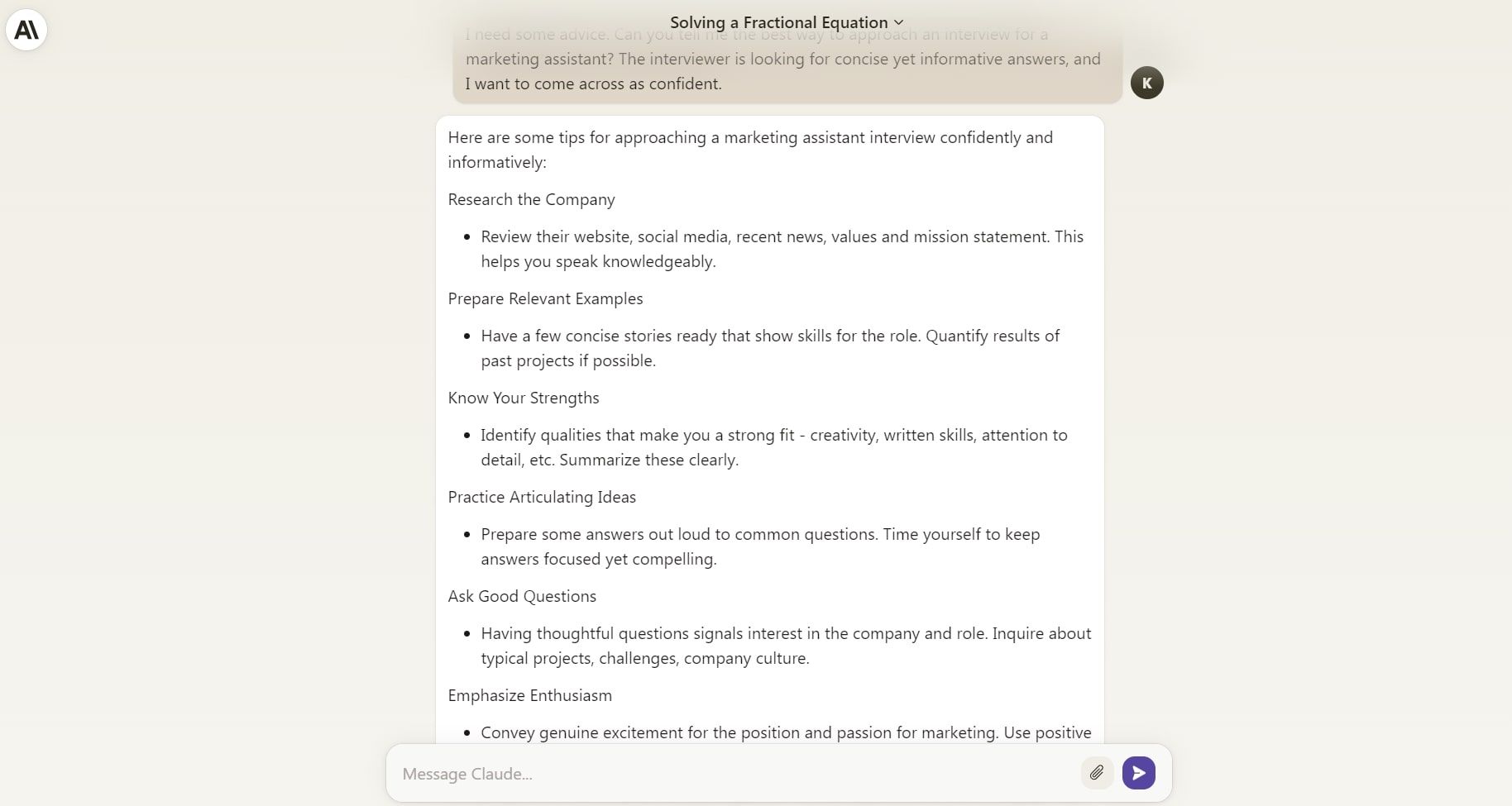

Here are the results from Claude:

Though Claude gave a shorter response, the language used was just as conversational and natural as that provided by ChatGPT.

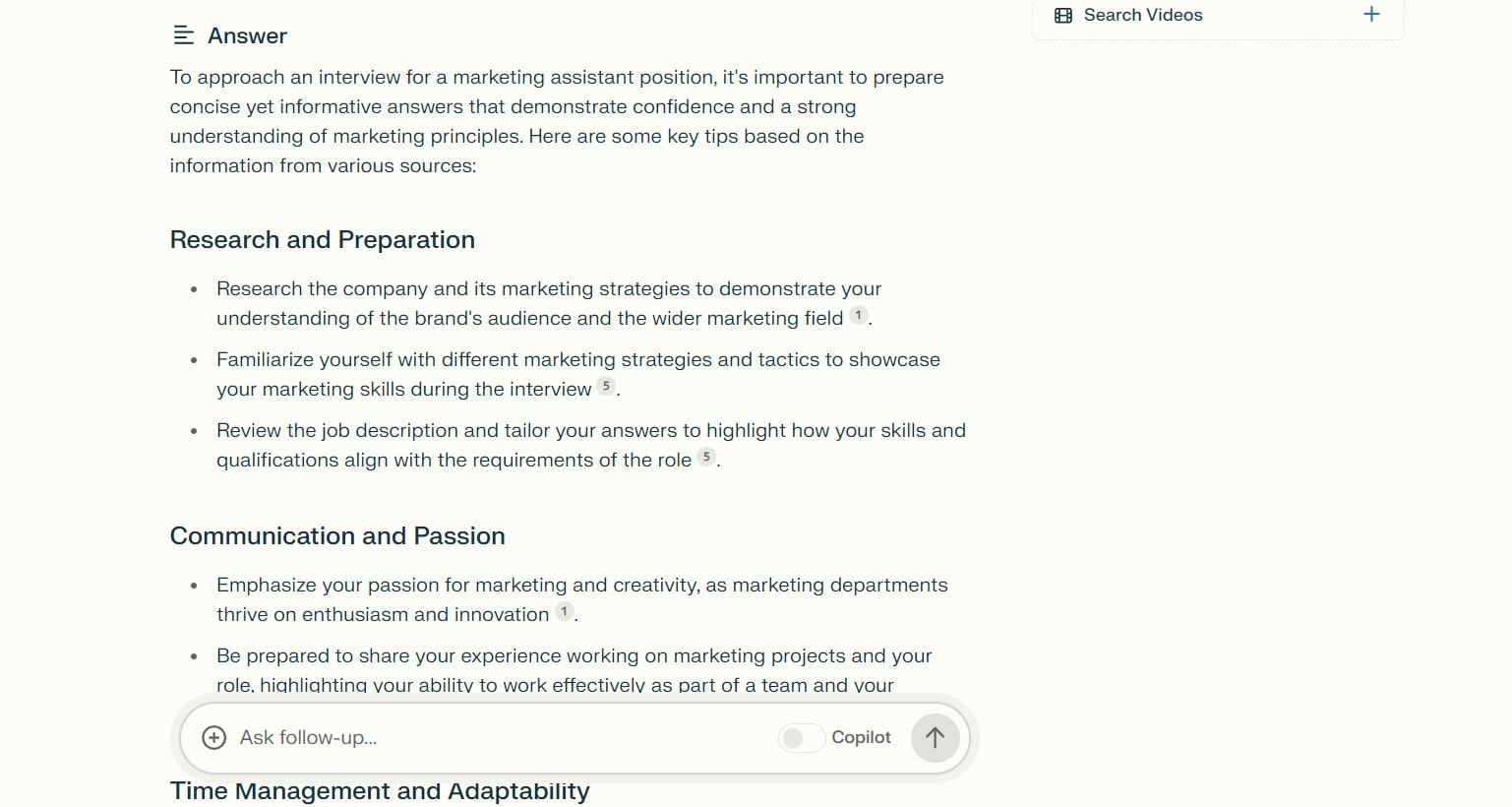

We also asked Perplexity the same question while using the GPT-3.5 LLM to see how close the answer would be to the ChatGPT response.

Perplexity’s conversational abilities rely on the LLM being used, and GPT-3.5 is what you’ll be dealing with if you’re using the free version. Again, Perplexity responded with natural and conversational language, and also provided citations for its points, which can be very useful for fact-checking and further research.

Evidently, the GPT-3.5 responses differed on ChatGPT and Perplexity, which is important to note. Some similar points were touched upon, but you certainly won’t be getting identical responses on both platforms.

6. Math and Coding Abilities

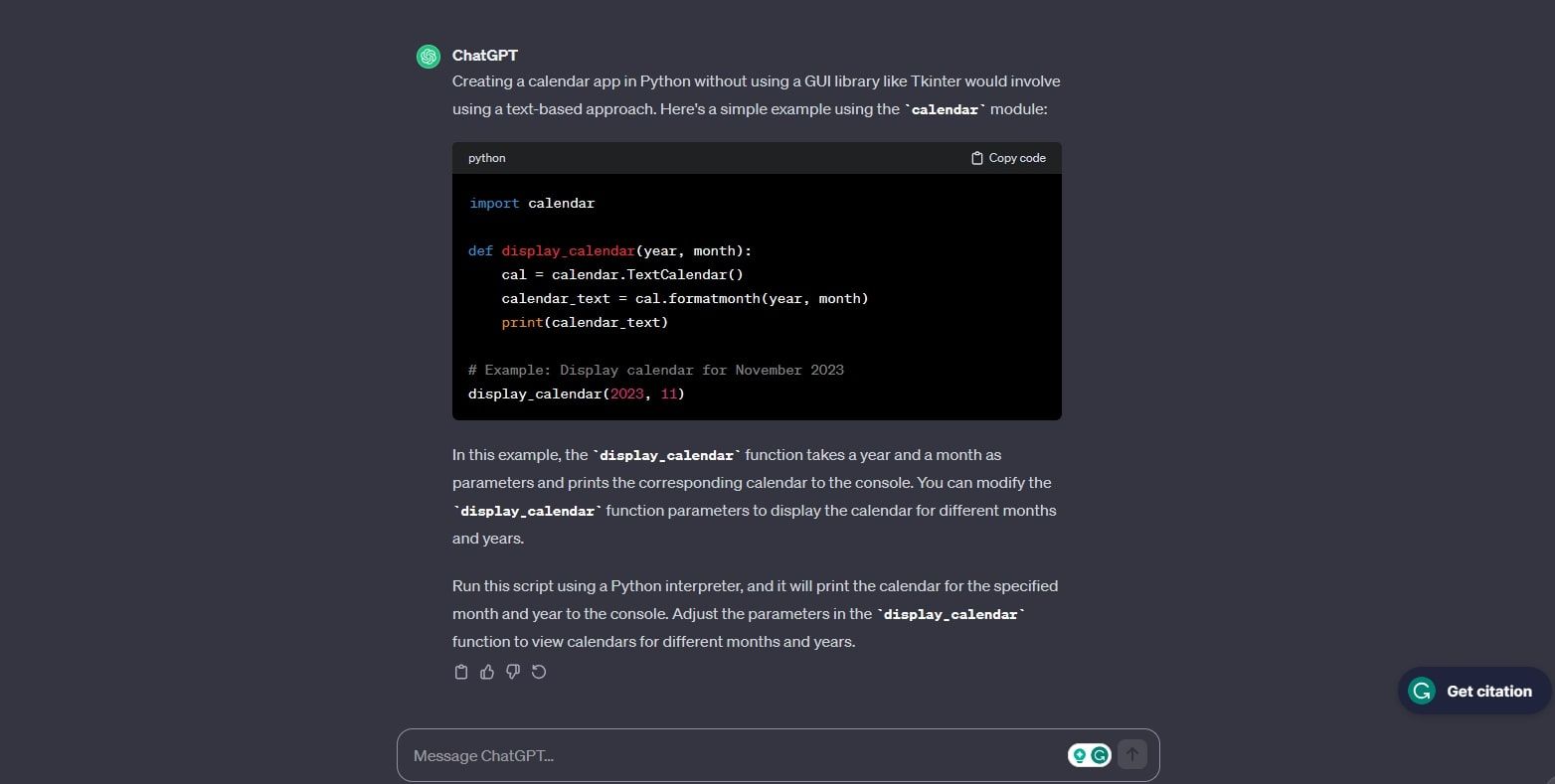

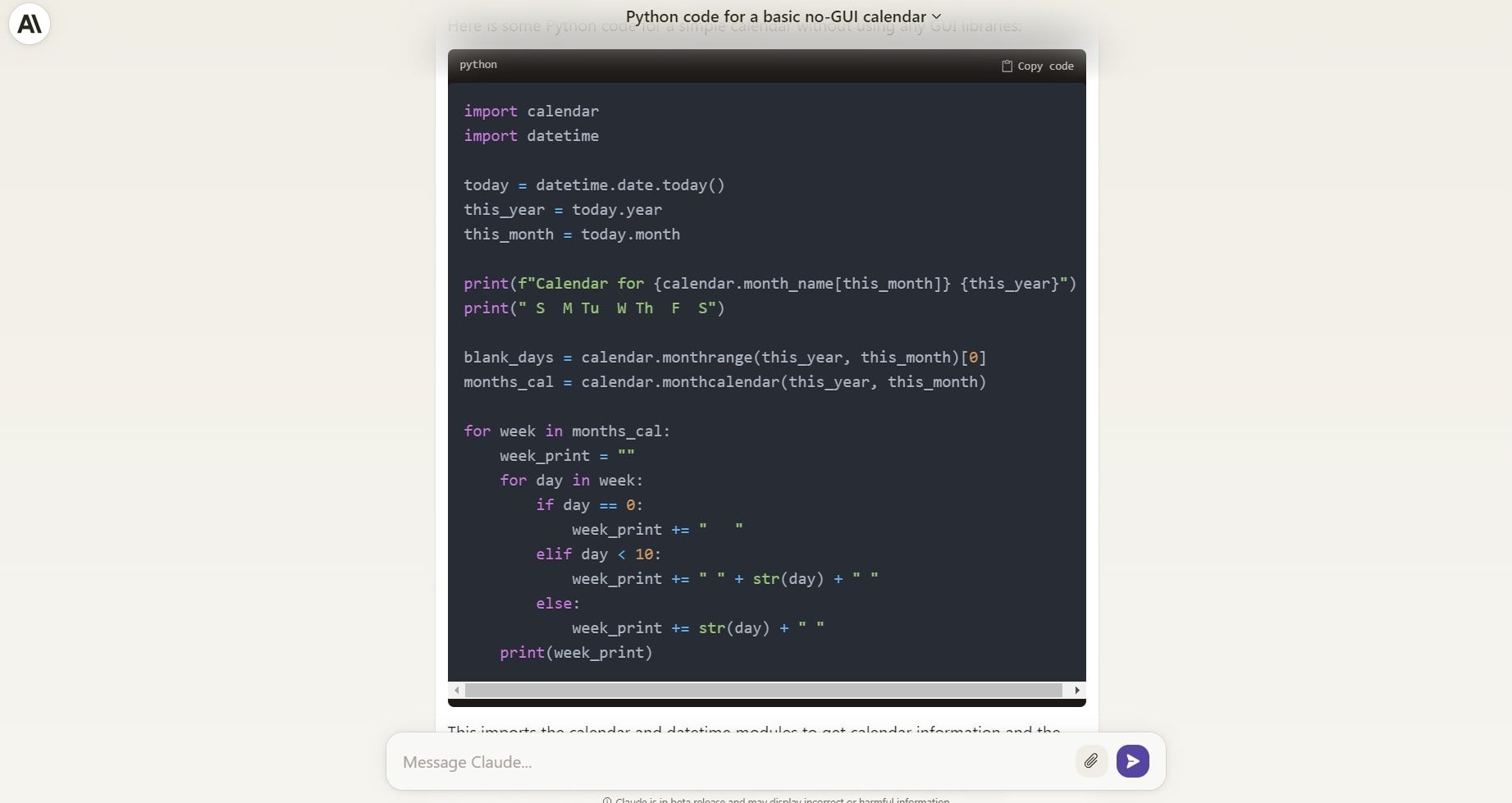

GPT-3.5, GPT-4, and Claude are not designed to write highly complex code, but you can still use them for simpler coding support. To test this, we asked all three chatbot platforms to provide simple Python code for a desktop calendar program without using a GUI library.

Here’s what we got from ChatGPT:

ChatGPT provided a brief but effective code excerpt which worked successfully when tested, providing a simple text-based Python monthly calendar.

From Claude, we got the following result:

Claude gave a slightly longer code excerpt that provided the same result when tested.

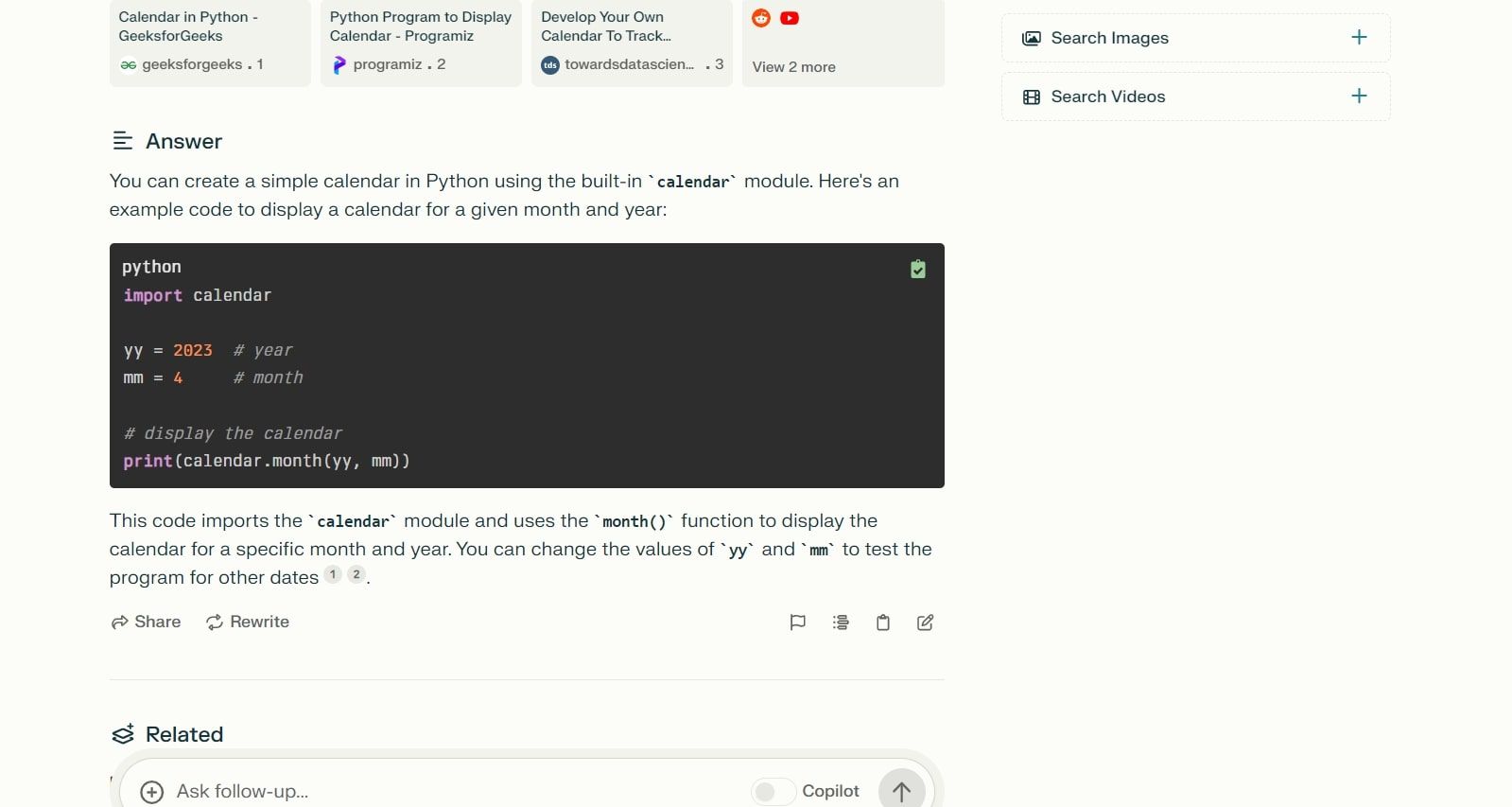

Here’s Perplexity’s response:

Perplexity also provided a code excerpt that worked successfully when tested, again giving the same result.
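For readers who can’t see the screenshots, here is a minimal sketch of what a text-based monthly calendar without a GUI library can look like. This is not the code any of the three chatbots returned; it is our own example built on Python’s standard-library `calendar` module, and the function name `render_month` is made up for illustration.

```python
import calendar


def render_month(year: int, month: int) -> str:
    """Return a plain-text calendar for one month, with weeks starting on Monday."""
    cal = calendar.TextCalendar(firstweekday=calendar.MONDAY)
    return cal.formatmonth(year, month)


if __name__ == "__main__":
    # Print January 2024 to the terminal; no GUI library is involved.
    print(render_month(2024, 1))
```

Running the script prints a small grid with the month name, a row of weekday abbreviations, and the dates underneath, which is roughly the kind of output we were looking for from each chatbot.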

Mathematics

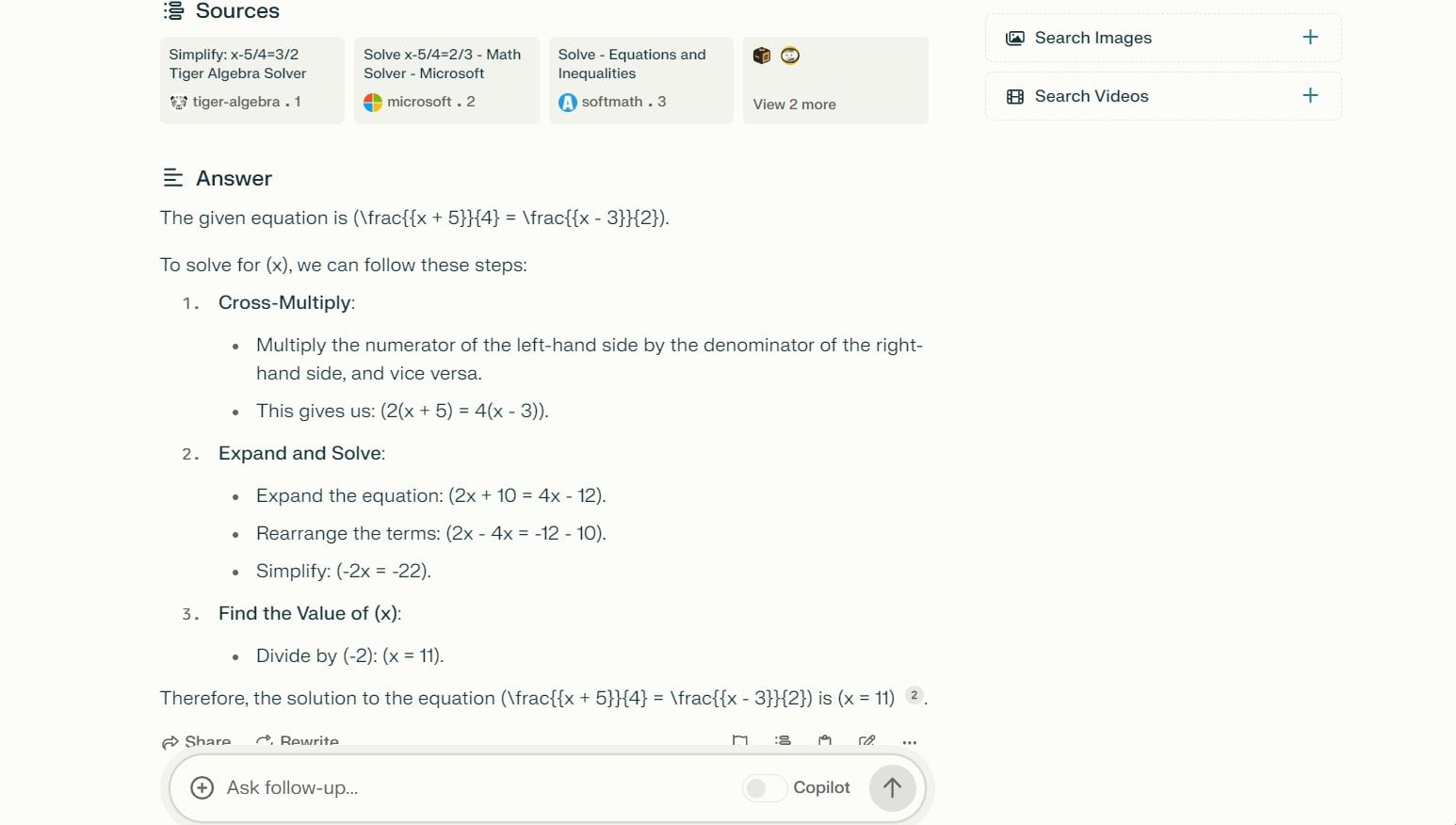

In terms of mathematics, we provided all three platforms with an algebraic problem: (x + 5) / 4 = (x - 3) / 2. This isn’t a simple equation, but a fairly difficult problem that students may come across.

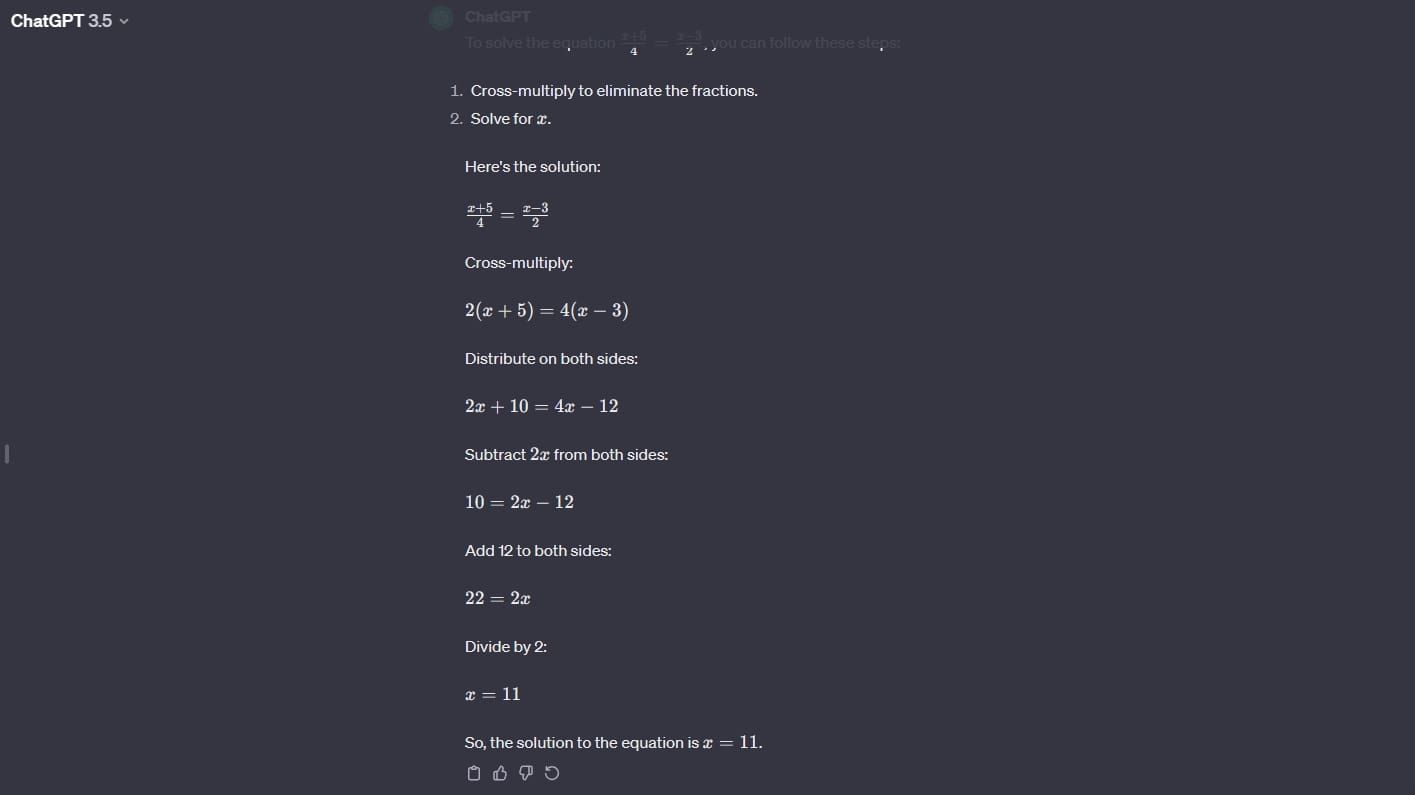

Here’s the response we received from ChatGPT:

ChatGPT gave the correct answer, and demonstrated how to solve the equation.

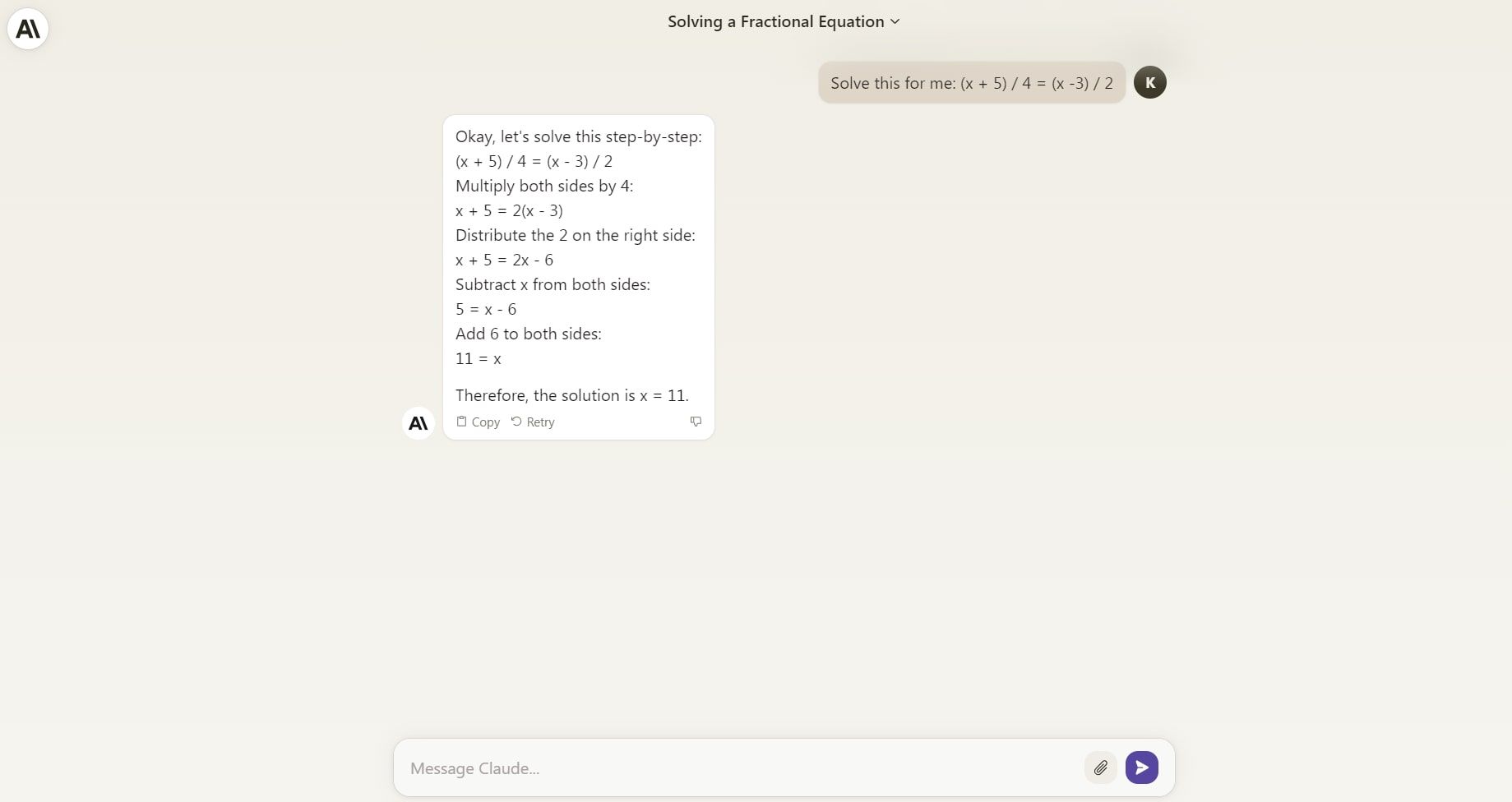

Here’s Claude’s response:

Claude also gave the correct answer, along with the solution process.

Finally, we got the below response from Perplexity:

Perplexity also gave the correct answer, as well as the solution process.
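If you want to check the result yourself, multiplying both sides of (x + 5) / 4 = (x - 3) / 2 by 4 gives x + 5 = 2x - 6, so x = 11. The short sketch below does the same thing in Python by cross-multiplying, then substitutes the answer back into both sides. The helper name `solve_linear` and its parameter names are our own, chosen for illustration.

```python
from fractions import Fraction


def solve_linear(a1, b1, d1, a2, b2, d2):
    """Solve (a1*x + b1) / d1 = (a2*x + b2) / d2 for x using exact fractions."""
    # Cross-multiplying gives d2*(a1*x + b1) = d1*(a2*x + b2),
    # which rearranges to (d2*a1 - d1*a2) * x = d1*b2 - d2*b1.
    coefficient = d2 * a1 - d1 * a2
    if coefficient == 0:
        raise ValueError("x cancels out, so there is no unique solution")
    return Fraction(d1 * b2 - d2 * b1, coefficient)


# (x + 5) / 4 = (x - 3) / 2
x = solve_linear(a1=1, b1=5, d1=4, a2=1, b2=-3, d2=2)
print(x)

# Substitute back into both sides to confirm they match.
left = Fraction(x + 5, 4)
right = Fraction(x - 3, 2)
print(left == right)
```

A quick check like this is a handy way to verify any chatbot’s algebra before relying on it.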

It’s important to note that no AI chatbot should be totally trusted with math equations, as they do have room for error. Check out our piece on why you shouldn’t use ChatGPT for mathematics to learn more.

Choosing an AI Chatbot Can Be Challenging

There are so many AI chatbot platforms out there today that it can be difficult to choose the best one for you. In the end, selecting the right chatbot comes down to your budget, intended use, and location. Consider the factors above if you’re trying to choose between ChatGPT, Claude, and Perplexity.

- Title: AI Conversationalists Showdown: Comparing ChatGPT, Perplexity, and Claude

- Author: Ian

- Created at : 2025-02-12 16:06:27

- Updated at : 2025-02-19 19:02:40

- Link: https://techidaily.com/ai-conversationalists-showdown-comparing-chatgpt-perplexity-and-claude/

- License: This work is licensed under CC BY-NC-SA 4.0.